
Adobe terms clarified: Will never own your work, or use it to train AI

A change to Adobe terms set the internet alight yesterday, after a number of pro users of the company’s apps reacted with anger and confusion to the scary-looking wording.

The company initially issued a rather dismissive statement that its terms have been in place for years and it was merely clarifying them, but subsequently wrote a blog post which tackled the issue in more detail …

The controversy over the updated Adobe terms

Adobe Creative Cloud users opened their apps yesterday to find that they were forced to agree to new terms, which included some frightening-sounding language. It seemed to suggest Adobe was claiming rights over their work.

Worse, there was no way to continue using the apps, to request support to clarify the terms, or even to uninstall the apps, without agreeing to the terms.

A number of high-profile pros didn’t hold back in expressing their opinions of this.

We noted at the time that possible explanations included things like generating thumbnails of customer work, and CSAM scanning – and the company has confirmed that both apply.

Initial Adobe statement

When we requested comment from Adobe, the company’s initial statement didn’t really help, thanks to a dismissive ‘nothing to see here, move along’ tone.

“This policy has been in place for many years. As part of our commitment to being transparent with our customers, we added clarifying examples earlier this year to our Terms of Use regarding when Adobe may access user content. Adobe accesses user content for a number of reasons, including the ability to deliver some of our most innovative cloud-based features, such as Photoshop Neural Filters and Remove Background in Adobe Express, as well as to take action against prohibited content. Adobe does not access, view or listen to content that is stored locally on any user’s device.”

Subsequent explanation

However, the company did subsequently realise the issue wasn’t going to go away until it offered a proper explanation. It did so through a blog post.

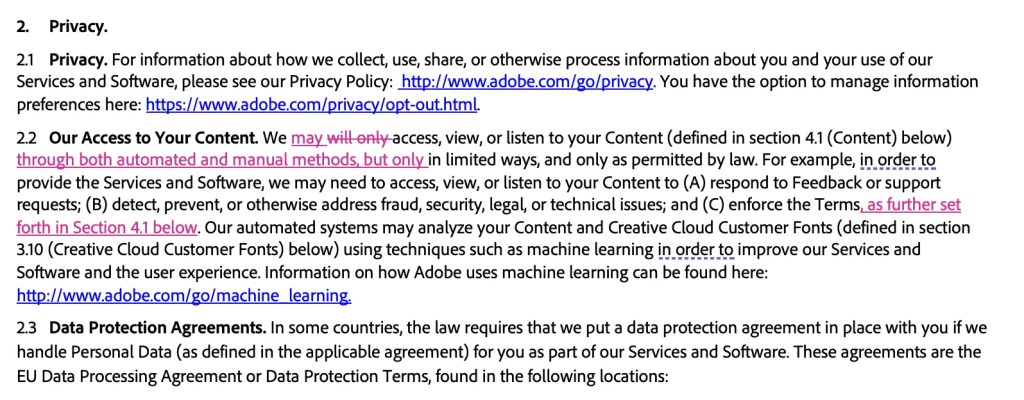

The company offered a general explanation: it wanted to be transparent about the content checks it performs. It also provided a change-log which highlighted the amendments.

“The focus of this update was to be clearer about the improvements to our moderation processes that we have in place. Given the explosion of Generative AI and our commitment to responsible innovation, we have added more human moderation to our content submissions review processes.”

The highlighted changes reflect the fact that Adobe now uses manual as well as automated scanning. Specifically, content flagged by automated scanning will now be escalated for human review.

The company goes on to specify that this review covers CSAM, as well as app usage which breaks its terms of use, such as spamming or hosting adult content outside of the area designated for it.

The company also confirmed that thumbnail creation is one reason for the terms.

Finally, it offered two key assurances.

- Adobe does not train Firefly Gen AI models on customer content. Firefly generative AI models are trained on a dataset of licensed content, such as Adobe Stock, and public domain content where copyright has expired. Read more here: https://helpx.adobe.com/firefly/faq.html#training-data

- Adobe will never assume ownership of a customer’s work. Adobe hosts content to enable customers to use our applications and services. Customers own their content and Adobe does not assume any ownership of customer work.

It said that it will be “clarifying the Terms of Use acceptance customers see when opening applications.”

Photo by Emily Bernal on Unsplash
